Artificial intelligence has been around in some form for decades, but the sudden advent of generative AI applications has pushed federal organizations to think more intently about it. In fact, more than thinking about it, many agencies have adopted AI or are experimenting with it. Several have established offices devoted to it and policies to regulate how they use it.

You have no doubt heard most of the cautions connected with AI: the potential for biased and unreliable outcomes, and the loss of privacy for individuals whose data an AI algorithm ingests. You can also expect pushback from line employees who worry about losing their jobs to AI tools.

Managing these risks will come naturally to organizations that start their AI journey as an organizational change management initiative rather than a technology project. AI will change organizations for sure. It alters the traditional relationship between people and the technology with which they interact; indeed, it changes people’s jobs.

Moreover, in a well-structured program, AI changes the connections among program managers, line staff members and the information technology staff. That’s because many of the best ideas for applying AI can, and should, come from line staff. In a sense, they become the drivers of AI initiatives, and they stay involved as the necessary iterative changes are handed over to the DevSecOps teams that write the code. Data ownership, too, blurs because AI applications tend to draw data from multiple domains.

Determine the why of AI

Whatever form of AI you are considering, whether generative AI, algorithms trained on your own agency’s data or another form of machine learning, the best starting point is determining why you need it. What benefit do you hope to gain, and how will that advance mission delivery or the efficiency and economy of agency operations?

When all the agency functions potentially affected by AI get involved in setting the list of priority use cases, the change management part of AI will come more naturally. Each group—IT, mission, the line employees, even HR and finance—will better understand its own role in connection to AI, and at an early stage. That early understanding will lead to wider buy-in.

Three categories of use cases have emerged in the here-and-now era of AI. Two of them are promising:

- “Chatting” using the agency’s own data. Such an application might summarize all the data an agency has on a particular topic or suggest courses of action based on more data than any individual would be aware of or able to absorb. Many federal executives hope AI can help them cut through the digital noise to know what they really need to know. This group of applications also includes tools to automate necessary but tedious and repetitive tasks such as job listings, contract writing and creating training data sets for AI itself.

- Citizen services, using AI to boost the agency’s ability to deliver individualized services or answers to questions.

The third application in which many agencies are interested is more problematic: Research-based document creation using generative AI.

The generative programs now available can produce grammatically pristine and seemingly thoroughly researched documents, but the content of those documents is often incorrect. Limited in the data they can draw from, and lacking reliable discernment, these programs can incorporate erroneous claims of fact, deviations from important policy or regulation, or outright fabrications into their output.

Some industry estimates put the accuracy of AI-generated drafts at about 80% on average. Treat generative AI programs as aids for creating first drafts, with expert analysts ready to review and correct the results. Rather than replacing analysts and writers, AI makes them both more crucial and more efficient.

Guidance for guardrails

However you deploy artificial intelligence, or even if you choose not to, you must maintain vigilance against AI aimed back at your agency. AI can easily produce counterfeit bids on solicitations, job applicants’ essays and grant applications, to name just a few potential traps.

Consider costs carefully. AI can help you cut costs, but it is not free to design or implement. In planning, be sure to account for the development and infrastructure costs, such as additional cloud services AI will require.

Finally, be sure you deploy what has come to be called explainable AI. In the public sector, more than any other, it is crucial to know—and be able to articulate—how and why the algorithm produced its result. This knowledge will speed up the continuous improvement in your AI deployment and ensure its acceptance by those you want it to benefit.

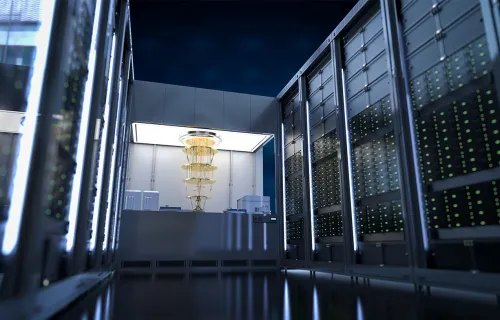

For another perspective on a key emerging technology, read "It's not too soon to think about quantum computing."