
In this article we will learn about Azure ML Pipelines and how adding ML pipeline capabilities to your development cycle can give developers better insight into the design, implementation and deployment of an end-to-end advanced analytics solution. Whether you only need to run a single Python/R script or a set of complex analytics tasks in your project, ML pipelines can make your development cycle easier and more efficient.

We will first look at why you would use ML pipelines in the first place, and then provide sample code to get you started building and deploying your own analytics workflows. This article is aimed at developers, ML engineers and managers who work on cloud analytics solutions or want to learn how to add cloud capabilities to their projects.

Understanding the Machine Learning Development Lifecycle

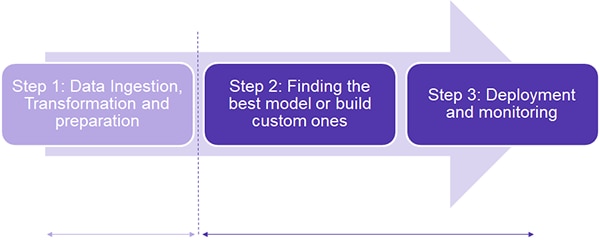

At a high level, a machine learning lifecycle consists of three stages: 1) gathering and pre-processing data, 2) finding the most suitable models and 3) testing, deploying and monitoring the solution. It is generally considered good practice to write modular code for each of these stages, which improves code reusability and saves compute resources. Each module becomes easier to debug, and compute-heavy processes do not have to be re-run: data preparation and cleaning often take a lot of compute, but the outputs of that stage can be saved for reuse.

What are Azure ML pipelines?

An Azure ML pipeline is an independently executable workflow of a complete end-to-end machine learning task. It should not be mistaken for a tool that can only perform machine learning tasks. Rather, it provides structure to the development lifecycle of any advanced analytics solution. Not only can you use the Azure ML designer to build automated ML deployment services with just your own data, but pipelines are also fully integrated with the Azure Machine Learning workspace, where you can build custom models suited to your business.

A pipeline can be thought of as a collection of modular scripts, each written to complete a small, specific task. For example, the three stages of a standard machine learning development cycle can be encapsulated in a pipeline as three steps:

1) data preparation and pre-processing,

2) model building and

3) deployment and testing

Since each step may have different compute requirements, we can define and use a separate compute configuration for each discrete step. For instance, one could assign a compute cluster with 16 GB of memory to step 1 and a more powerful cluster with GPU capabilities to the model training step.

The most important feature of ML pipelines is the concept of 'reuse of steps'. Imagine you have a computer vision task at hand, and the first step consists of data pre-processing (for example, using a few OpenCV functions to manipulate images). Now suppose there are bugs in the training script. When you fix the bugs and rerun the pipeline, the run reuses the output of the previous pre-processing step instead of executing it again, provided that step's script, inputs and parameters are unchanged. This saves a considerable amount of compute. According to Santosh Pillai, Principal Program Manager on the Microsoft Azure team, enterprises can save up to 30% of compute cost during the development cycle using this feature of ML pipelines.

In the next pipeline run, execution starts from Step 2 onwards, because the output of Step 1 is reused.
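
The reuse behaviour can be illustrated with a toy pipeline runner in plain Python. This is a conceptual sketch, not the Azure ML SDK. The `Step` and `ToyPipeline` classes, the compute labels `cpu-16gb` and `gpu-cluster`, and the hand-maintained `version` string are all hypothetical. Each step's output is cached under a hash of its name, version, input and parameters. After the training step is fixed and its version bumped, only that step runs again.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class Step:
    name: str
    version: str   # bumped by hand when the step's code changes, e.g. after a bug fix
    compute: str   # hypothetical compute target label, e.g. "cpu-16gb" or "gpu-cluster"
    func: Callable[[Any, Dict[str, Any]], Any]
    params: Dict[str, Any] = field(default_factory=dict)


class ToyPipeline:
    """Runs steps in order, reusing a step's cached output when its name,
    version, input and parameters match an earlier execution."""

    def __init__(self, steps: List[Step]):
        self.steps = steps
        self.cache: Dict[str, Any] = {}  # fingerprint -> output, kept across runs

    @staticmethod
    def fingerprint(step: Step, data: Any) -> str:
        # Inputs and params must be JSON-serializable for this toy hashing scheme.
        payload = json.dumps(
            {"name": step.name, "version": step.version,
             "input": data, "params": step.params},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def run(self, data: Any) -> Tuple[Any, List[str]]:
        executed = []  # steps that actually ran (were not reused)
        for step in self.steps:
            key = self.fingerprint(step, data)
            if key in self.cache:
                data = self.cache[key]  # reuse the previous output, skip the work
            else:
                data = step.func(data, step.params)
                self.cache[key] = data
                executed.append(f"{step.name} on {step.compute}")
        return data, executed


def preprocess(rows, params):
    """Stands in for an expensive pre-processing step (e.g. image transforms)."""
    return [round(x * params["scale"], 3) for x in rows]


if __name__ == "__main__":
    prep = Step("preprocess", "1", "cpu-16gb", preprocess, {"scale": 0.5})
    buggy_train = Step("train", "1", "gpu-cluster", lambda rows, p: {"mean": sum(rows)})
    pipeline = ToyPipeline([prep, buggy_train])
    print(pipeline.run([2.0, 4.0, 6.0]))  # both steps execute

    # Fix the training bug and bump its version; preprocessing is reused.
    fixed_train = Step("train", "2", "gpu-cluster",
                       lambda rows, p: {"mean": sum(rows) / len(rows)})
    pipeline.steps = [prep, fixed_train]
    print(pipeline.run([2.0, 4.0, 6.0]))  # only the training step executes
```

Azure ML detects changes to a step's script and inputs itself, and reuse is controlled per step through `allow_reuse`, which is enabled by default in the v1 SDK. The explicit `version` field here only stands in for that change detection.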

Conclusion: Why use Azure ML pipelines?

- Modular Code Structure: Easy to reuse, debug and maintain.

- Reuse of Steps: Save up to around 30% of compute costs during the model development process.

- Run containerized Python/R projects on Azure: The OS and dependencies can be isolated for each pipeline run, while still allowing data to be shared within a run.

- Separate compute resources for separate tasks.

- Provides a visual toolkit to design, monitor and publish analytics workflows.

- Easy serving of ML predictions through REST endpoints directly from the ML model.

The next section provides sample code for running a demo ML pipeline for an Iris dataset classification task.

Building and running your first Azure ML pipeline: Iris Classification

Pre-requisites:

- Create an Azure Machine Learning workspace to hold all your pipeline resources.

- Configure your development environment to install the Azure Machine Learning SDK, or use an Azure Machine Learning compute instance with the SDK already installed.

- Download the Iris dataset in .csv format.

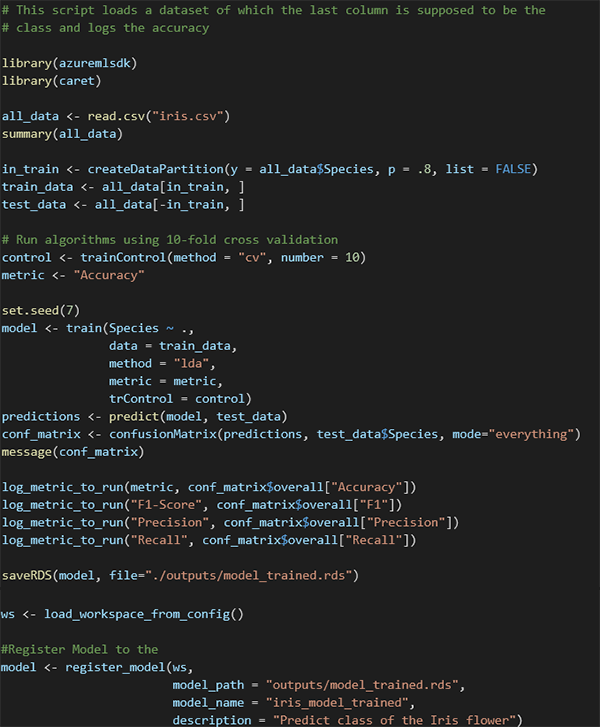

Create train.R, a sample script that trains an Iris classification model in R:

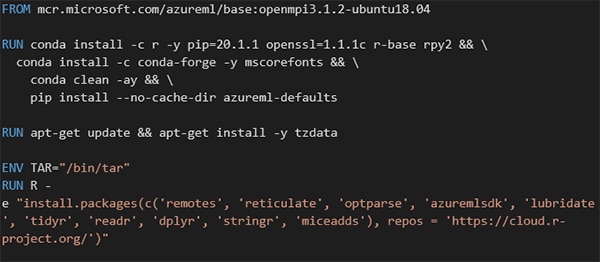

Create Dockerfile.txt to install the project-specific packages:

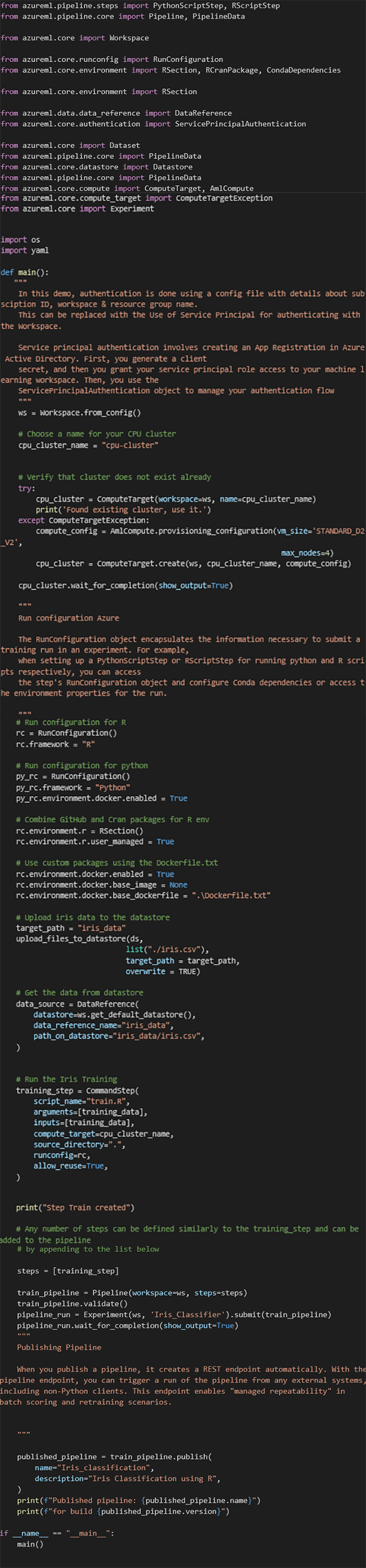

Create a pipeline.py file, the script that runs the ML pipeline:

Run pipeline.py from the command line. If everything works, you will see a new experiment, 'Iris Classifier', in the Azure ML workspace, where you can monitor the pipeline run.

For any queries or more details, feel free to contact me.

***