This blog post is about how to create a data pipeline from an API (source) to Redshift (destination) with AWS Lambda, AWS Glue, and AWS S3.

AWS Lambda enables running your code without provisioning or managing servers.

With Lambda, you upload your code to the service and can simply focus on the code itself.

You can write Lambda functions in your favorite language, such as Node.js, Python, Go, Java, and more. Lambda is great for short-running jobs, and its ease of use makes it very handy. Limitations to take into account when using Lambda are the maximum running time of 15 minutes per invocation and the maximum memory of 10,240 MB.
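Because a single invocation cannot run longer than 15 minutes, a job can check how much time it has left and stop early instead of being cut off in the middle of its work. A minimal sketch, assuming the work can be split into independent items; `run_within_time_budget`, the item list in the event, and the 30-second safety margin are hypothetical. `get_remaining_time_in_millis()` is a method of the context object that Lambda passes to Python handlers.

```python
from typing import Callable, Iterable, List

# Hypothetical safety margin: stop while there is still time to save results.
SAFETY_MARGIN_MS = 30_000


def run_within_time_budget(
    items: Iterable[str],
    process_item: Callable[[str], None],
    remaining_ms: Callable[[], int],
    margin_ms: int = SAFETY_MARGIN_MS,
) -> List[str]:
    """Process items until the remaining time drops below the margin.

    Returns the items that were NOT processed, so a later run can pick them up.
    """
    pending = list(items)
    while pending and remaining_ms() > margin_ms:
        process_item(pending.pop(0))
    return pending


def lambda_handler(event, context):
    # context.get_remaining_time_in_millis() reports how many milliseconds
    # are left before the function's configured timeout.
    items = event.get("items", [])
    left_over = run_within_time_budget(
        items,
        process_item=lambda item: print(f"processing {item}"),
        remaining_ms=context.get_remaining_time_in_millis,
    )
    return {"unprocessed": left_over}
```

Returning the unprocessed items keeps the design simple: the next scheduled run can continue where this one stopped.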

AWS Glue is a serverless data integration service that makes it easy to discover, prepare, and combine data for analytics. AWS Glue provides both visual and code-based interfaces to make data integration easier. AWS Glue is also handy when you need to reload all data or change names in the pipelines. With job bookmarks enabled, AWS Glue keeps a record of the data it has already loaded and won't load it again.

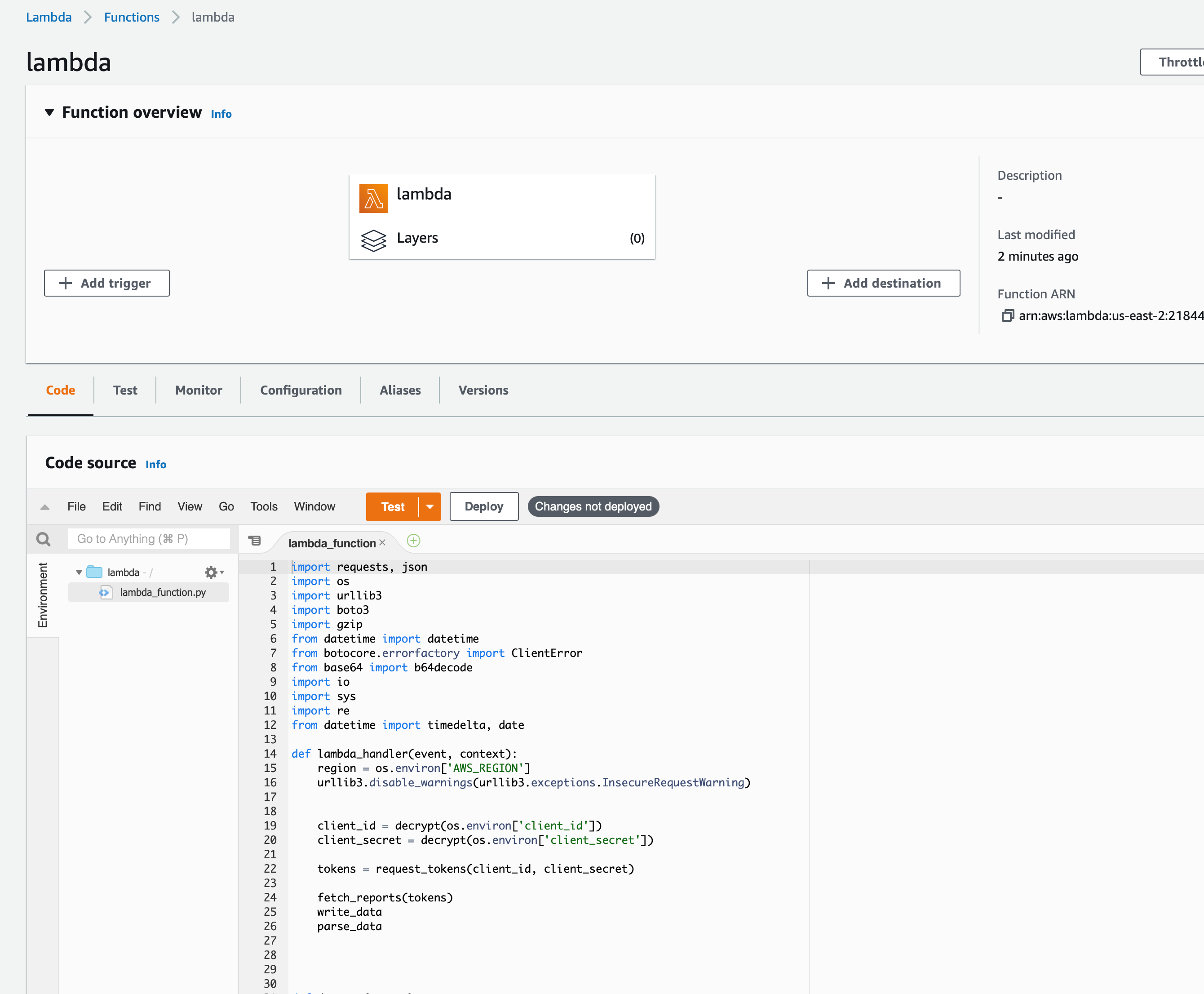

Getting started with the pipeline: AWS Lambda

The first task is creating an AWS Lambda function that connects to the API and fetches the data.
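A minimal sketch of the fetch step, using only the Python standard library (`urllib`), so no extra layer is needed for it. It assumes a hypothetical JSON API that returns `{"data": [...], "next": <url or null>}` and a hypothetical `API_URL` environment variable; adapt both to your real API. The HTTP call is passed in as a function so the paging logic can be tested without a network.

```python
import json
import os
import urllib.request
from typing import Callable, Dict, List, Optional


def http_get_json(url: str, timeout: float = 30.0) -> Dict:
    """GET a URL and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def fetch_all_pages(
    first_url: str,
    get_json: Callable[[str], Dict] = http_get_json,
    max_pages: int = 100,
) -> List[Dict]:
    """Follow the hypothetical "next" links and collect every record."""
    records: List[Dict] = []
    url: Optional[str] = first_url
    pages = 0
    while url and pages < max_pages:
        page = get_json(url)
        records.extend(page.get("data", []))
        url = page.get("next")
        pages += 1
    return records


def lambda_handler(event, context):
    # API_URL is a hypothetical environment variable set on the function.
    records = fetch_all_pages(os.environ["API_URL"])
    return {"record_count": len(records)}
```

The `max_pages` cap is a simple guard against an API that keeps returning a `next` link.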

Things you need to adjust in Lambda:

- General configuration: memory and maximum running time (timeout)

- Trigger: when the job is launched

- Layers: needed if you use a library that is not in the Lambda runtime, such as requests

- Environment variables: encrypted credentials
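The same settings can also be applied from code instead of the console. A minimal sketch using boto3: `update_function_configuration` and `add_permission` on the Lambda client, `put_rule` and `put_targets` on the EventBridge client. The function name, layer ARN, memory size, schedule, and helper names are hypothetical. The clients are passed in, so the sketch can be exercised with stubs.

```python
from typing import Dict, List


def lambda_settings(function_name: str, layer_arns: List[str], env: Dict[str, str]) -> Dict:
    """Keyword arguments for lambda_client.update_function_configuration()."""
    return {
        "FunctionName": function_name,
        "MemorySize": 512,       # MB, hypothetical value (maximum 10240)
        "Timeout": 900,          # seconds, i.e. the 15-minute maximum
        "Layers": layer_arns,    # e.g. a layer that ships the requests library
        "Environment": {"Variables": env},
    }


def configure_and_schedule(lambda_client, events_client, settings: Dict,
                           schedule: str = "rate(1 day)") -> str:
    """Apply the settings and add a scheduled EventBridge trigger."""
    function = lambda_client.update_function_configuration(**settings)
    rule_name = f"{settings['FunctionName']}-schedule"
    rule = events_client.put_rule(Name=rule_name, ScheduleExpression=schedule)
    events_client.put_targets(
        Rule=rule_name, Targets=[{"Id": "1", "Arn": function["FunctionArn"]}]
    )
    # EventBridge also needs permission to invoke the function.
    lambda_client.add_permission(
        FunctionName=settings["FunctionName"],
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule["RuleArn"],
    )
    return rule["RuleArn"]
```

Usage would look like `configure_and_schedule(boto3.client("lambda"), boto3.client("events"), lambda_settings("api-to-s3", [...], {...}))`. This is meant as a one-time setup: running it again would fail on the duplicate `StatementId`.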

No data source is perfect; only in tutorials do you see rainbows and unicorns, so the data will usually need some transformation or processing. In this pipeline we fetch and process the data and then transfer the files to AWS S3. A good naming convention is recommended: it pays off later when you set up AWS Glue, and it also makes it easier to manage and see which files we have in AWS S3.
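A minimal sketch of the processing and upload step, assuming a hypothetical naming convention of `<source>/year=YYYY/month=MM/day=DD/<source>_<timestamp>.json`. Hive-style `year=/month=/day=` folders can be picked up by a Glue crawler as partition columns. The records are written as newline-delimited JSON (one object per line); the cleaning rule in `clean_record` is only an example. `put_object(Bucket=..., Key=..., Body=...)` is the boto3 S3 client call; the client is passed in.

```python
import json
from datetime import datetime, timezone
from typing import Dict, Iterable


def s3_key(source: str, fetched_at: datetime) -> str:
    """Hypothetical naming convention with date-partitioned folders (UTC)."""
    ts = fetched_at.astimezone(timezone.utc)
    return (
        f"{source}/year={ts:%Y}/month={ts:%m}/day={ts:%d}/"
        f"{source}_{ts:%Y%m%dT%H%M%SZ}.json"
    )


def clean_record(record: Dict) -> Dict:
    """Example processing: lower-case keys and strip whitespace from strings."""
    return {
        key.strip().lower(): value.strip() if isinstance(value, str) else value
        for key, value in record.items()
    }


def to_json_lines(records: Iterable[Dict]) -> str:
    """One JSON object per line."""
    return "\n".join(json.dumps(clean_record(r), ensure_ascii=False) for r in records)


def upload(s3_client, bucket: str, source: str,
           records: Iterable[Dict], fetched_at: datetime) -> str:
    """Write the processed records to S3 and return the object key."""
    key = s3_key(source, fetched_at)
    s3_client.put_object(Bucket=bucket, Key=key, Body=to_json_lines(records).encode("utf-8"))
    return key
```

Putting the source name first in the key keeps each API's files under its own prefix, which is convenient when you point a crawler at that prefix later.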

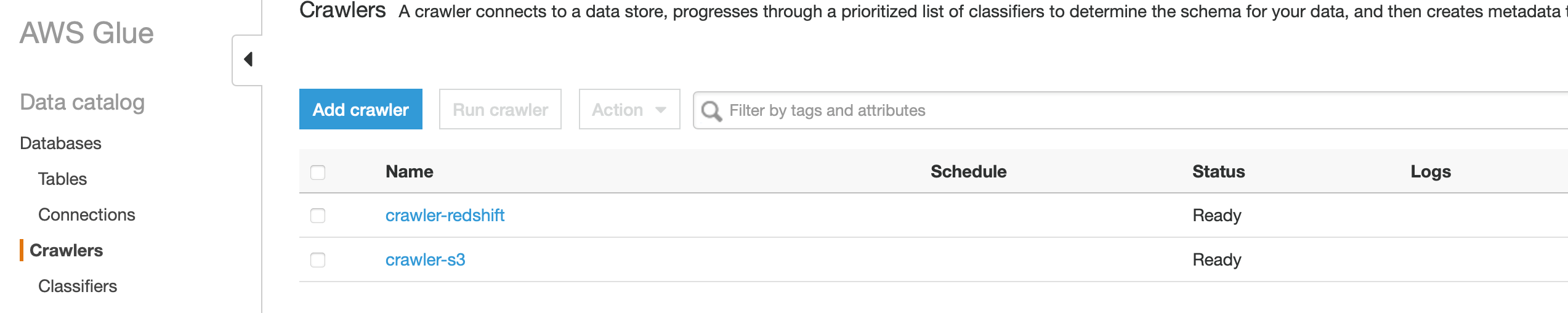

Continuing with AWS Glue

The next task is creating crawlers in AWS Glue: one for the source files in AWS S3 and one for the destination table in Redshift. The crawlers register both in the AWS Glue Data Catalog, which is what lets us load data from AWS S3 into Redshift. The destination table needs to be created in Redshift before you can crawl it.
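The crawlers can also be created with boto3 (`create_crawler` and `start_crawler` on the Glue client). A minimal sketch; the crawler names, IAM role ARN, Data Catalog database, S3 path, Glue connection name, and table path are all hypothetical. The Redshift crawler reaches the cluster through a Glue JDBC connection that must already exist, and its path follows the `<database>/<schema>/<table>` form.

```python
from typing import Dict


def s3_crawler(name: str, role_arn: str, database: str, s3_path: str) -> Dict:
    """Keyword arguments for glue_client.create_crawler() over the S3 source files."""
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
    }


def redshift_crawler(name: str, role_arn: str, database: str,
                     connection_name: str, table_path: str) -> Dict:
    """Keyword arguments for a crawler over the Redshift table via a JDBC connection.

    table_path uses the <database>/<schema>/<table> form, e.g. "dev/public/weather".
    """
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"JdbcTargets": [{"ConnectionName": connection_name, "Path": table_path}]},
    }


def create_crawlers(glue_client, *crawler_kwargs: Dict) -> None:
    """Create each crawler and run it once."""
    for kwargs in crawler_kwargs:
        glue_client.create_crawler(**kwargs)
        glue_client.start_crawler(Name=kwargs["Name"])
```

Writing both crawlers into the same Data Catalog database keeps the source and destination tables next to each other when you build the job.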

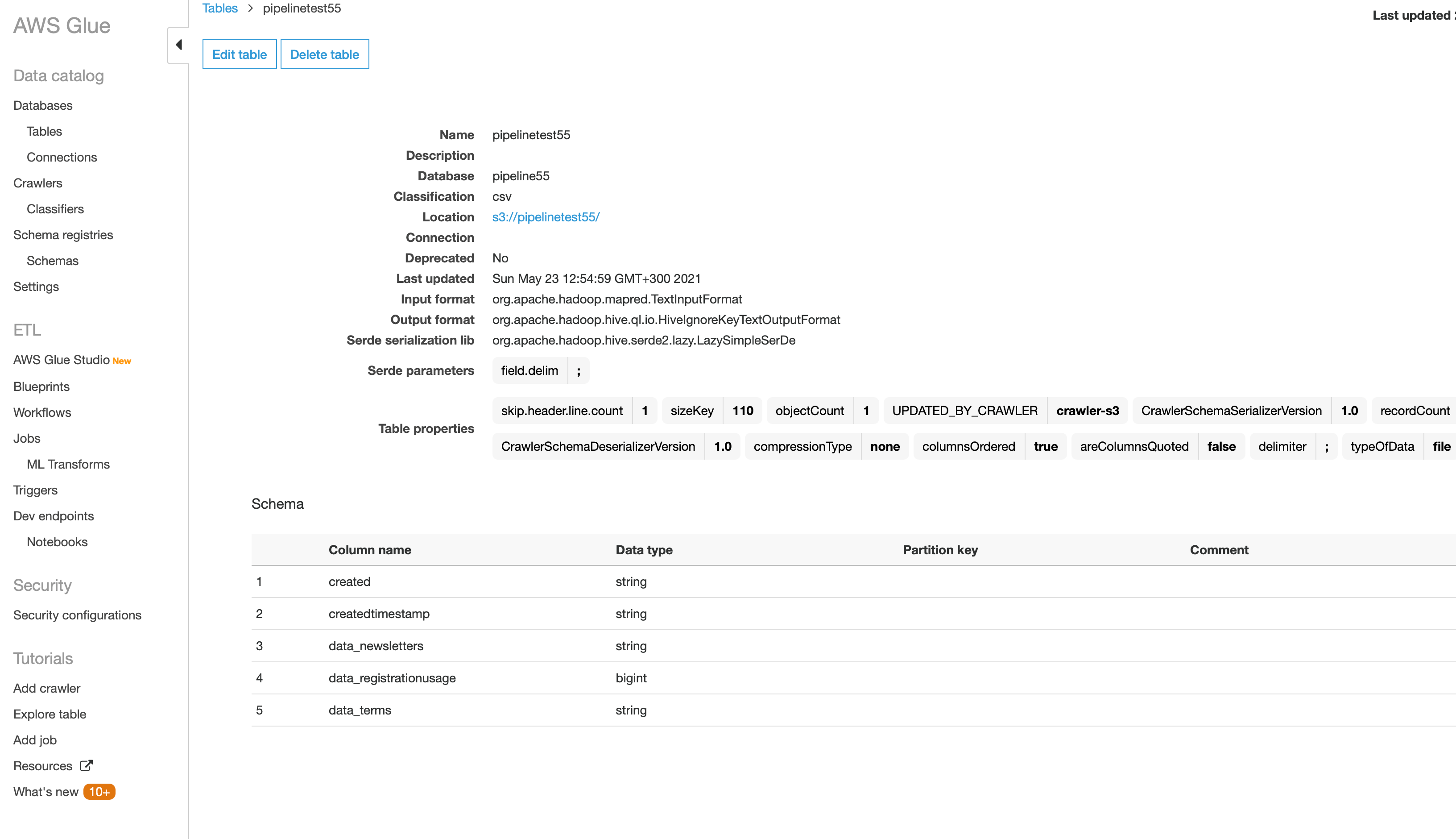

You can use the AWS Glue Data Catalog to see the column structure for files in AWS S3 and also for Redshift.
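The column structure is also available programmatically through `get_table` on the boto3 Glue client. A minimal sketch; the database and table names you pass in are hypothetical and depend on how the crawler named the tables. Partition keys (for example `year`/`month`/`day` from S3 folder names) are returned separately from the regular columns, so the helper includes both.

```python
from typing import Dict, List, Tuple


def catalog_columns(get_table_response: Dict) -> List[Tuple[str, str]]:
    """(name, type) pairs from a glue_client.get_table() response."""
    table = get_table_response["Table"]
    columns = table.get("StorageDescriptor", {}).get("Columns", [])
    partitions = table.get("PartitionKeys", [])
    return [(c["Name"], c["Type"]) for c in columns + partitions]


def show_columns(glue_client, database: str, table_name: str) -> None:
    """Print the columns the crawler recorded for one table."""
    response = glue_client.get_table(DatabaseName=database, Name=table_name)
    for name, col_type in catalog_columns(response):
        print(f"{name}: {col_type}")
```

Comparing the output for the S3 table and the Redshift table is a quick way to spot name or type mismatches before mapping.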

Wrapping up the pipeline

We now have a source (AWS S3) and a destination (Redshift), so the next step is to put the two together.

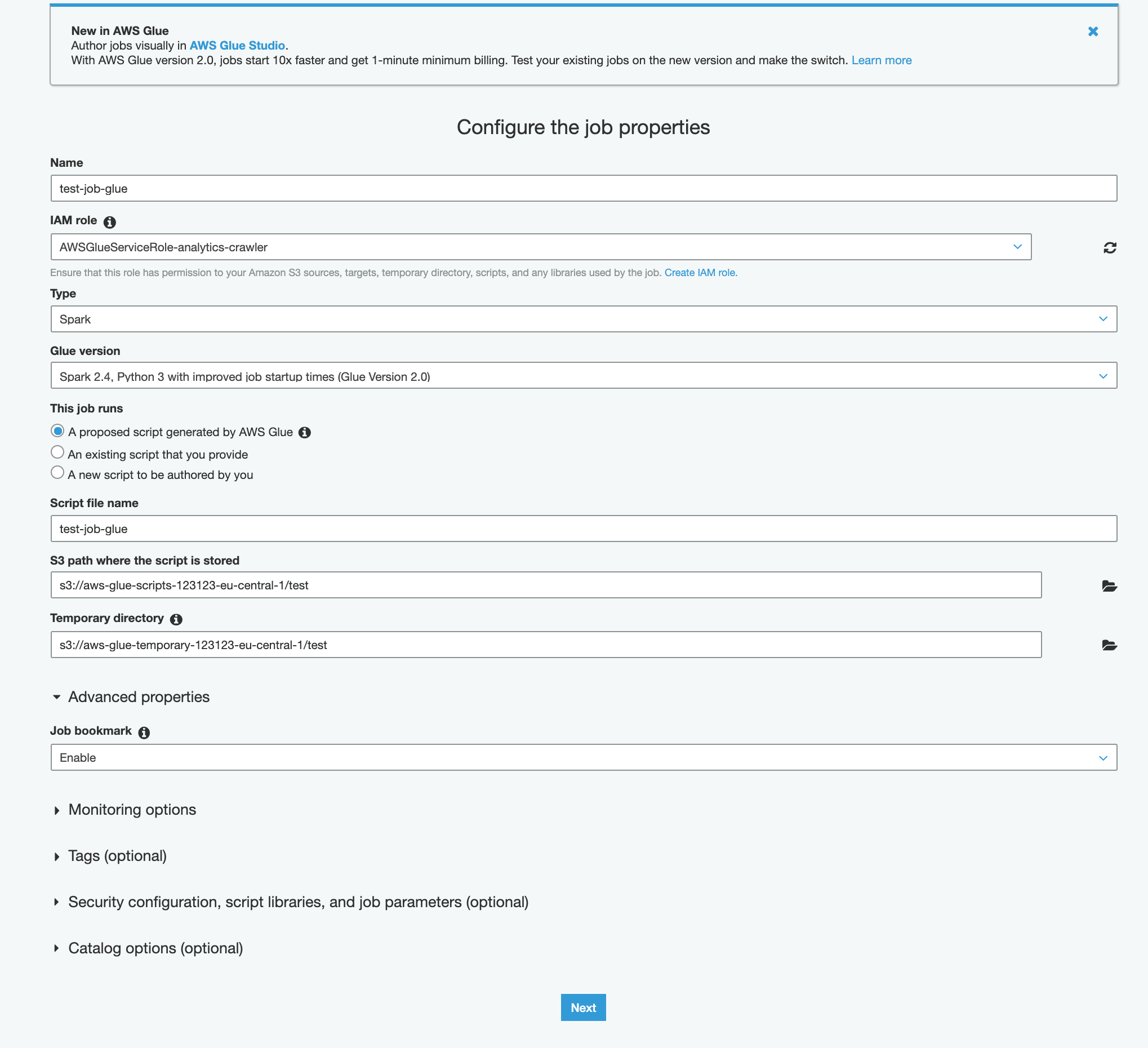

Creating the Glue job

An AWS Glue job has default settings that you should change:

- “Job bookmark” from Disable to Enable (remembers what has already been loaded into Redshift)

- “Number of workers” from 10 to 2 (start low and go bigger if needed)

- “Job timeout” from two days to 15 minutes

- “Number of retries” from 0 to 1
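For reference, the settings above map onto `create_job` parameters of the boto3 Glue client. A minimal sketch; the job name, role ARN, script location, Glue version, and worker type are hypothetical choices. `Timeout` is in minutes, and bookmarks are switched on through the `--job-bookmark-option` job argument.

```python
from typing import Dict


def glue_job_settings(name: str, role_arn: str, script_location: str) -> Dict:
    """Keyword arguments for glue_client.create_job() with the settings above."""
    return {
        "Name": name,
        "Role": role_arn,
        "Command": {"Name": "glueetl", "ScriptLocation": script_location, "PythonVersion": "3"},
        "GlueVersion": "4.0",     # hypothetical choice
        "WorkerType": "G.1X",     # hypothetical choice
        "NumberOfWorkers": 2,     # start low, increase if needed
        "Timeout": 15,            # minutes (the default is 2880, i.e. two days)
        "MaxRetries": 1,
        "DefaultArguments": {
            # Job bookmarks: remember what has already been loaded.
            "--job-bookmark-option": "job-bookmark-enable",
        },
    }


def create_glue_job(glue_client, settings: Dict) -> str:
    """Create the job and return its name."""
    return glue_client.create_job(**settings)["Name"]
```

Bookmarks only take effect if the job script calls `job.init()` and `job.commit()` and gives its sources a `transformation_ctx`; scripts generated by the visual editor do this.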

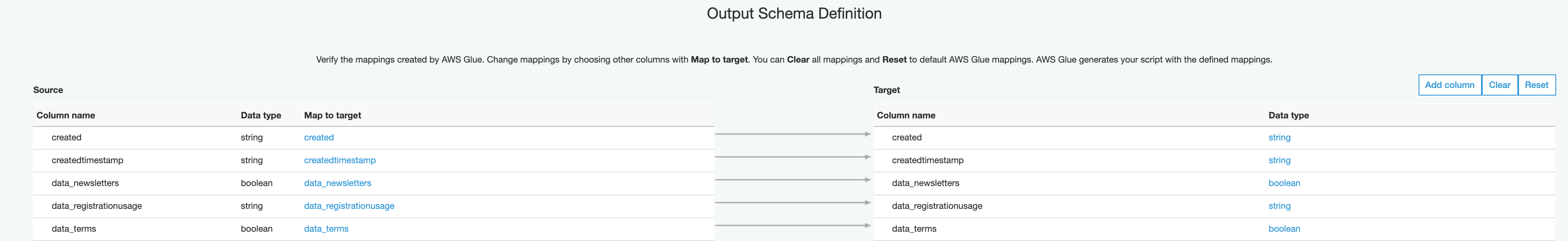

The next phase is selecting the source and destination and then mapping the columns between them. AWS Glue automatically maps source columns to destination columns with the same name.
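To make name-based matching concrete, here is a plain-Python sketch that pairs source and destination columns by name (case-insensitively, which is an assumption) and produces tuples in the `(source name, source type, target name, target type)` shape that the `ApplyMapping` transform in Glue scripts accepts. This illustrates the idea; it is not Glue's own matching logic, and `map_by_name` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

Mapping = Tuple[str, str, str, str]


def map_by_name(source: Dict[str, str],
                target: Dict[str, str]) -> Tuple[List[Mapping], List[str]]:
    """Pair columns whose names match ignoring case.

    Returns the mappings and the source columns that found no match
    (these need a manual mapping or are left out of the load).
    """
    target_by_lower = {name.lower(): name for name in target}
    mappings: List[Mapping] = []
    unmatched: List[str] = []
    for src_name, src_type in source.items():
        tgt_name = target_by_lower.get(src_name.lower())
        if tgt_name is None:
            unmatched.append(src_name)
        else:
            mappings.append((src_name, src_type, tgt_name, target[tgt_name]))
    return mappings, unmatched


# In a Glue job script the mappings would be passed to the transform, e.g.
# ApplyMapping.apply(frame=source_frame, mappings=mappings, transformation_ctx="mapping")
```

Listing the unmatched columns explicitly is the useful part: those are the ones you have to map by hand in the job.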

After mapping, we can run the AWS Glue job. When the job finishes, you can query the data in Redshift and, for example, start building dashboards.
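If you want to run the job from code and wait for it to finish, the boto3 Glue client provides `start_job_run` and `get_job_run`. A minimal sketch; the job name and 30-second poll interval are hypothetical, and `sleep` is injectable so the loop can be tested without waiting.

```python
import time
from typing import Callable

# Job run states after which the run will not change any more.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR", "EXPIRED"}


def run_glue_job(glue_client, job_name: str, poll_seconds: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Start a Glue job run and poll until it reaches a terminal state."""
    run_id = glue_client.start_job_run(JobName=job_name)["JobRunId"]
    while True:
        run = glue_client.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        state = run["JobRunState"]
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)
```

Once it returns `SUCCEEDED`, the newly loaded rows are in the Redshift table and ready to query.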