Large language models (LLMs) like OpenAI's ChatGPT, Google's Bard, Anthropic's Claude, and Meta's LLaMA generate excitement for their potential to support many artificial intelligence (AI) use cases. However, the most effective and innovative future AI systems will likely combine these versatile foundations with more specialized models. These include computer vision for analyzing images, regression models for predicting numerical values, reinforcement learning for optimizing behaviors, and more.

Just as an orchestra combines diverse musical talents, the AI platforms of tomorrow will integrate different machine-learning approaches as modular components led by human expert “conductors.” The future of AI is an orchestra of models, not a solo act.

The key is thoughtfully combining LLMs with other AI capabilities, quality data sets, experts, and processes to create a system of checks and balances. This combination allows tailored solutions that drive value by increasing the quality, safety, and accuracy of the output. LLMs are versatile foundations, but they reach their full potential only when integrated with broader AI capabilities and infrastructure. Any new technology brings challenges, but a comprehensive approach lets organizations make safe strides on their AI journeys.

At CGI, our vision of the future for AI is this ecosystem of models with complementary strengths, working in conjunction with ongoing expert human involvement and oversight, or what we refer to as the “human expert in the loop,” to achieve the desired alignment. For example, a generative language model could rapidly produce an initial content draft. A logic-driven quality assurance system could help audit and verify critical terms. A specialist content model fine-tuned on the target domain could further refine the language and tone to match an organization’s previous documents.

Selectively combining specialist and generalist AI models in this staged, validated manner under human supervision can enable tailored solutions where each model contributes unique strengths toward a more reliable and trustworthy output. This fusion of diverse models and validations will be critical to safely unlocking the full potential of AI.
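The staged flow described above (draft, audit, refine, human sign-off) can be sketched in a few lines. This is a minimal illustration, not a description of any CGI product: the function names, the `Draft` type, and the string-based stand-ins for the three models are all hypothetical placeholders for real model calls.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Draft:
    text: str
    issues: List[str] = field(default_factory=list)
    approved: bool = False


def generate_draft(prompt: str) -> Draft:
    # Hypothetical stand-in for a generalist LLM producing a first draft.
    return Draft(text=f"Draft contract for {prompt}. Payment due in 30 days.")


def audit_terms(draft: Draft, required_terms: List[str]) -> Draft:
    # Logic-driven QA step: record every required term missing from the draft.
    lowered = draft.text.lower()
    draft.issues = [t for t in required_terms if t.lower() not in lowered]
    return draft


def refine_tone(draft: Draft) -> Draft:
    # Hypothetical stand-in for a domain-tuned specialist model.
    draft.text = draft.text.replace("Draft contract", "Services agreement")
    return draft


def human_review(draft: Draft, approve: Callable[[Draft], bool]) -> Draft:
    # The human expert makes the final call on whether the output is released.
    draft.approved = approve(draft)
    return draft


def run_pipeline(prompt: str, required_terms: List[str],
                 approve: Callable[[Draft], bool]) -> Draft:
    draft = generate_draft(prompt)
    draft = audit_terms(draft, required_terms)
    draft = refine_tone(draft)
    return human_review(draft, approve)


# Example: a reviewer who rejects any draft with open audit issues.
result = run_pipeline("Acme", ["payment", "termination"], lambda d: not d.issues)
```

In this example the audit step flags the missing "termination" term, so the reviewer callback declines to approve the draft. Each stage can be swapped for a different model without changing the others, which is the point of treating models as modular components.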

Success factors for multi-model AI

Building a flourishing multi-model AI ecosystem requires expertise to orchestrate models, data, infrastructure, and operations into a system of checks and balances. Understanding which complementary AI models to use and what resources are needed to ensure proper governance will help teams focus on responsibly creating AI-led value. With careful planning and strategic guidance to coordinate all the pieces, organizations can develop such AI environments to drive ethical business impacts.

To achieve this carefully crafted orchestra of moving parts, an AI ecosystem with a robust system of checks and balances requires having the following in place:

While you can never eliminate biases, you can reduce them by using suitable models and processes, improving your training approach, and increasing the size and diversity of the data set.

Ensuring ethics and security within a healthy AI ecosystem

Ensuring you get AI results that align with your goals and ethics will rely on your AI ecosystem and how you train your models and feed them data to meet your standards. Every organization will have different requirements and tolerance levels.

When it comes to security, there are multiple potential attack vectors related to LLMs and AI models in general. A relatively new threat comes from bad actors who try to influence the output of a model through AI social engineering. As with humans, LLMs can be susceptible to influence through a carefully selected series of prompts. To counter those threats and avoid having your models share more data than expected, additional AI models can act a bit like security agents, providing oversight and support just as a supervisor would in a human call center.
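As a rough sketch of the supervisor idea, the snippet below places a simple check between a model and the user. It is only an illustration: a real oversight layer would likely be a trained model rather than regular expressions, and the names `supervise`, `guarded_reply`, and `SENSITIVE_PATTERNS` are hypothetical.

```python
import re
from typing import Callable, List, Tuple

# Assumed examples of data the organization does not want a model to disclose.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}

WITHHELD_MESSAGE = "This response was withheld for review."


def supervise(response: str) -> Tuple[bool, List[str]]:
    # Return whether the response may be released, plus the names of any
    # sensitive patterns that were found in it.
    findings = [name for name, pattern in SENSITIVE_PATTERNS.items()
                if pattern.search(response)]
    return (not findings, findings)


def guarded_reply(prompt: str, model: Callable[[str], str]) -> str:
    # Every model response passes through the supervisor before release.
    response = model(prompt)
    allowed, _findings = supervise(response)
    return response if allowed else WITHHELD_MESSAGE
```

For instance, `guarded_reply("Who handles billing?", lambda p: "Contact jane.doe@example.com")` returns the withheld message, while a response with no sensitive match passes through unchanged. Withheld responses could then be routed to a human reviewer.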

Complementing these oversight models, enterprises should consider the following additional techniques:

Organizations should approach security and privacy early by ensuring their AI ecosystem implements the necessary checks and balances.

In a healthy multi-model AI ecosystem, parallels can be drawn with many aspects of modern human society. So-called “Good Guys” will want to keep using specialized and generalized models to counteract the so-called “Bad Guys,” who will want to circumvent and corrupt the models for their own negative purposes. In many ways, the race is already underway.

Preventing false data with “human experts in the loop”

A key challenge with LLMs and other generative AI models is that they can produce unsupported or illogical information, similar to how humans may inaccurately recollect facts. Such “hallucinations” result from how deep learning networks operate.

Put simply, the models take elements of data sets, like words, and turn them into tokens, which are basic units of text or code. Next, based on the tokens’ relationships in a given context, the tokens are translated into multidimensional vectors, which are collections of numbers. When decoding back into natural language for a given prompt, the models generate the text that is most statistically likely given their training. Statistically likely is not the same as true, so the result may be false or irrational content.
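The toy example below illustrates why "most statistically likely" and "correct" can diverge. It is deliberately simplified and rests on stated assumptions: it uses word counts rather than a neural network, the "vectors" are plain follow-on counts rather than learned embeddings, and the three-sentence corpus is invented for illustration.

```python
from collections import Counter, defaultdict

# Invented training data: a common misconception outnumbers the correct fact.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]

# Step 1: split text into tokens and give each distinct token an integer ID.
tokens = [sentence.split() for sentence in corpus]
vocab = {tok: i for i, tok in enumerate(sorted({t for s in tokens for t in s}))}

# Step 2: count which token follows which. These counts play the role that
# learned relationships between tokens play in a real model.
follow_counts = defaultdict(Counter)
for sentence in tokens:
    for current, nxt in zip(sentence, sentence[1:]):
        follow_counts[current][nxt] += 1


def token_vector(token: str) -> list:
    # Represent a token as a vector of numbers, one entry per vocabulary item.
    counts = follow_counts[token]
    return [counts.get(t, 0) for t in sorted(vocab, key=vocab.get)]


def most_likely_next(token: str) -> str:
    # Step 3: decode by picking the statistically most frequent continuation.
    return follow_counts[token].most_common(1)[0][0]


prediction = most_likely_next("is")
```

Here `prediction` is "sydney", because that continuation appears twice in the toy corpus and the correct answer only once. The model has done exactly what it was built to do, and the output is still wrong.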

Organizations can mitigate the occurrence of hallucinations with a variety of techniques and approaches. Examples include:

- Bringing human judgment directly into the loop: Better human-in-the-loop tools for content validation, flagging anomalies, and iterative training by AI experts can allow for real-time error correction, feedback, and improved model training.

- Adopting training techniques and process improvements: Reinforcement learning from human feedback, adversarial training, and multi-task learning can improve factual grounding by exposing the model to various scenarios and responses curated by experts. In addition, augmented data sets give the model a more robust training foundation, while confidence scoring and uncertainty quantification help it signal how reliable a given output is.

- Using novel model architectures: Incorporating external knowledge stores and modular logic-driven components can provide additional layers of information and decision-making to help the AI produce more accurate outputs.

- Implementing multiple foundation models: In a healthy AI ecosystem, using multiple models to compare outputs and reduce outliers can add a layer of cross-validation, enhancing the overall accuracy of the results.

- Incorporating causality and reasoning into model objectives: Beyond predictive accuracy, adding causality and reasoning can give the AI model a deeper understanding of the data, reducing the chance of drawing factually incorrect conclusions.

- Focusing on hybrid approaches: Combining deep learning neural techniques with symbolic logic (more traditional rule-based algorithms) and knowledge representations can improve accuracy and alignment, reduce training time, and speed up time to market through reuse.
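Of the techniques listed above, comparing the outputs of multiple models is the simplest to sketch. The snippet below is one possible approach, assuming each model can be called as a function that returns a short answer; the function name `cross_check` and the 0.6 agreement threshold are illustrative choices, not recommendations.

```python
from collections import Counter
from typing import Callable, List, Optional, Tuple


def cross_check(prompt: str,
                models: List[Callable[[str], str]],
                min_agreement: float = 0.6) -> Tuple[Optional[str], float]:
    # Ask every model the same question and normalize the answers so that
    # trivial differences in case or whitespace do not count as disagreement.
    answers = [model(prompt).strip().lower() for model in models]
    top_answer, votes = Counter(answers).most_common(1)[0]
    agreement = votes / len(answers)
    if agreement >= min_agreement:
        return top_answer, agreement
    # Too little agreement: return no answer so a human expert can decide.
    return None, agreement


# Hypothetical stand-ins for three independent foundation models.
models = [lambda p: "Canberra", lambda p: "canberra ", lambda p: "Sydney"]
answer, agreement = cross_check("What is the capital of Australia?", models)
```

Two of the three stand-in models agree, so `answer` is "canberra" with an agreement of about 0.67. When the models disagree more widely, the function returns `None`, which is a natural point to bring the human expert into the loop.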

Although techniques such as those listed above will help reduce illogical results, LLMs will continue to be inherently fallible, just as human communication can be. This means that AI ecosystems will still require ongoing human checks and balances for the foreseeable future. Rather than replacing their human workforces with AI, organizations seeking to fully leverage the power of AI will focus on training programs that equip their employees to use these technologies.

With proper implementation, AI promises to augment employees' work and free them to spend more time on high-value tasks that leverage human strengths and interactions. For example, teachers could spend more one-on-one time engaging with students (see Benjamin Bloom's "The 2 Sigma Problem," 1984). Such productivity enhancements could help address talent shortages impacting many organizations. However, the transition may be challenging for some roles and require retraining and support.

With a thoughtful approach in a healthy AI multi-model ecosystem of checks and balances, organizations can work to maximize benefits and mitigate displacements as AI and human workforces evolve together, rather than compete against each other.

Advancing the healthy AI ecosystem

Just as a symphony orchestra combines the talents of diverse musicians into a harmonious whole, realizing AI's full potential requires thoughtfully integrating technologies, data, and human expertise.

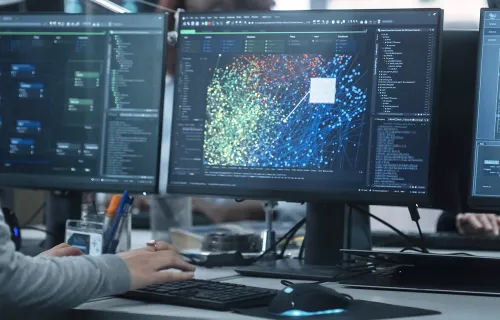

At CGI, we recognize that the most aligned AI-led output is achieved through this fusion. Our CGI PulseAI platform exemplifies the building of robust ecosystems to enable different AI models to work together under human supervision, like a conductor guiding the different sections of an orchestra.

Generalist language models provide a solid foundational melody atop which specialist computer vision and predictive models can layer complementary parts. By orchestrating these diverse capabilities — combining general and specialized models, using quality data assets, and enabling collaboration between AI systems and people through human-centered design — tailored solutions emerge for each business challenge.

The future of AI lies in this harmonious synthesis of models, data, and people to enable solutions that drive measurable value. Please reach out to discuss our perspectives on the evolving landscape of multi-model AI and best practices for implementation.
