For centuries, the financial services sector has pioneered enhanced services and engaging customer experiences (CX) through innovation. As its popularity grows, artificial intelligence (AI) is further transforming the industry through advances in machine learning, predictive analytics, and natural language processing. However, as financial institutions (FIs) implement these technologies, an ethical framework, trust, and explainability in AI become paramount. FIs hold more sensitive data and carry more customer reliance than most organizations. So what guardrails are needed, and why do ethics, trust, and explainability matter?

Constraints for protective measures

As FIs implement AI, they recognize the need to build constraints into these solutions. Building responsible AI (RAI) means focusing early in the process on developing an ethical framework, ensuring explainability (XAI), and designing for trustworthiness. In short, these elements are interwoven: stakeholders should be able to understand how a model reached an output so they can understand and trust its conclusions; customer permission should be obtained where appropriate; outputs should be ethically sound; and all AI inputs and outputs should follow company policies as well as state and federal regulations.

Solid ethical AI framework foundation

Responsible AI is about ensuring that the data and processes used to train AI neither perpetuate nor introduce bias and have no other adverse effects on society. It includes several elements, which you can read more about in our blog, Embracing responsible AI in the move from automation to creation. Ethics in AI means using data and technology during the training phase to embed fairness, transparency, and respect for users' rights into the decision-making processes of AI systems.

An ethical decision framework sets out the relevant considerations teams should study when working at each step of the AI workflow. For example:

- What AI is being used for

- Integrity and viability of data

- Data literacy and ongoing training

- Accuracy and validity of models

- Transparency

- Interpretability of algorithms and accountability structures

Without an ethical AI framework, organizations could face serious consequences, such as an increased risk of introducing unintended bias or discrimination, or even violating their customers' privacy. Being transparent about where data comes from, what data is used, and how it is used helps build customer trust and understanding (a key imperative). Transparency in the form of opt-out permissions helps customers gain trust while giving them control over how their data is collected and used. While data is at the heart of AI's power, transparency with customers is vital to building and maintaining their trust.

Explainability (XAI) impacts on customers

Trust matters because it directly affects customer engagement and loyalty. In a June 2023 speech, Acting Comptroller of the Currency Michael Hsu observed that innovation can move fast, leverage rapid prototyping, and fail fast. However, he cautioned that in the financial services sector, “the responsible approach to innovation is the better way.” He went on to say that trust should be considered at every point, from start to finish, to maintain a trusted, safe, sound, and fair AI tool.

To build trust, start with good data, then build the ability to understand and interpret AI-driven decisions or predictions. For example, in an AI-based lending tool, the ability to explain why a particular loan application was approved or denied not only builds customer and employee confidence but also supports the audit process by showing how a decision was derived (this is where the human imperative is embedded). Additionally, providing clear, comprehensive insight into how the AI model is trained and reinforced, including data sources, algorithms, and other components, helps ensure biases are not inadvertently introduced. XAI is fundamental to regulatory compliance, and regulators will require FIs to explain how their AI systems make decisions to ensure fairness, transparency, and the absence of bias.
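As a rough illustration of the lending example, the sketch below assumes a simple logistic-regression scoring model. The feature names, weights, intercept, and approval threshold are all hypothetical, and this is not a production credit model or any specific CGI tool. Because the model is linear, each feature's contribution to the score can be read off directly, and the most adverse contributions can be reported as "reason codes" that explain a denial.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical logistic-regression weights, for illustration only.
# Inputs are assumed to be standardized (z-scores), so 0 means "average
# applicant" and weight * value is that feature's push on the log-odds.
WEIGHTS: Dict[str, float] = {
    "credit_history_years": 0.8,
    "debt_to_income": -1.2,
    "recent_delinquencies": -1.5,
    "income": 0.6,
}
INTERCEPT = 0.3
APPROVAL_THRESHOLD = 0.5  # hypothetical cut-off on approval probability


@dataclass
class Decision:
    approved: bool
    probability: float
    # (feature, contribution) pairs, most adverse first
    reasons: List[Tuple[str, float]] = field(default_factory=list)


def explain_decision(applicant: Dict[str, float], top_n: int = 2) -> Decision:
    """Score an applicant and return the features that lowered the score most."""
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    # Reason codes: negative contributions, sorted from most to least adverse
    adverse = sorted(
        (item for item in contributions.items() if item[1] < 0),
        key=lambda item: item[1],
    )
    return Decision(probability >= APPROVAL_THRESHOLD, probability, adverse[:top_n])


if __name__ == "__main__":
    applicant = {
        "credit_history_years": -0.5,
        "debt_to_income": 1.0,
        "recent_delinquencies": 1.0,
        "income": 0.2,
    }
    decision = explain_decision(applicant)
    print("approved:", decision.approved, f"(p={decision.probability:.2f})")
    for name, contribution in decision.reasons:
        print(f"  reason: {name} ({contribution:+.2f})")
```

With these made-up numbers, the sample applicant is declined, and `recent_delinquencies` and `debt_to_income` are reported as the top two reasons. The same decision record can be logged for auditors. Non-linear models do not expose contributions this directly and typically need model-agnostic attribution methods such as SHAP to produce comparable explanations.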

Trust with regulators

Data privacy

With state and federal governments racing to enact data privacy protections, it’s imperative to build safeguards into AI data models now. In the U.S., individual states are working to enact their own data privacy standards. At the federal level, the proposed American Data Privacy Protection Act* (ADPPA) follows other consumer privacy laws and is designed to standardize data privacy nationally. The measure would limit the use or transfer of covered data to what is “reasonably necessary and proportionate to provide a service requested by the individual.” Key elements of the Act include the following:

- Transparency (disclosure of data collected, use, retention, etc.)

- Consumer control and consent (consumer rights over their data)

- Data security

- Requirements for small and medium-sized businesses

- Enforcement (FTC)

- Preemption (preempts state laws covered by the provisions)

Artificial intelligence

Regulators are also exploring AI-related topics like responsible AI, bias, fairness, consumer protections, explainability, and cybersecurity protections. A recently released executive order calls for safe, secure, trustworthy AI systems through the development of new standards and tools. One such tool is the National Institute of Standards and Technology (NIST) Artificial Intelligence Risk Management Framework 1.0. This framework was developed by scientists and technology experts, taking into account public comments from over 200 AI stakeholders. The framework is designed to promote trust, reduce biases, and protect the privacy of individuals. Released in January 2023, it is currently voluntary, but it provides a foundation for potential future regulations regarding the responsible use of AI.

The primary objective of the executive order and the AI Risk Management Framework is to protect consumers by ensuring they are not discriminated against, whether intentionally or unintentionally. Keeping a human involved in reviewing decisions and ensuring fairness, supported by sound internal policies, also makes it easier to protect consumers and earn their trust.
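One common way to surface possible unintended discrimination for human review is to compare outcome rates across groups. The sketch below is a minimal example of that idea, not a method prescribed by the executive order or the NIST framework. It assumes decisions are available as hypothetical `(group, approved)` pairs, and it borrows the 0.8 ratio of the "four-fifths rule" from U.S. employment-selection guidelines purely as an illustrative review trigger. A flagged group is a signal for a person to investigate, not a legal finding of discrimination.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Illustrative trigger borrowed from the "four-fifths rule"; an assumption,
# not a regulatory threshold for lending.
REVIEW_THRESHOLD = 0.8


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approvals: Dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def groups_needing_review(
    decisions: Iterable[Tuple[str, bool]], threshold: float = REVIEW_THRESHOLD
) -> Dict[str, float]:
    """Groups whose approval rate is below `threshold` x the best group's rate.

    Returns {group: ratio_to_best} so a human reviewer can see how far off it is.
    """
    rates = approval_rates(decisions)
    best = max(rates.values(), default=0.0)
    if best == 0.0:
        return {}  # nothing approved (or no data): ratios are undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    # Hypothetical batch: group A approved 8 of 10, group B approved 5 of 10
    batch = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
    for group, ratio in groups_needing_review(batch).items():
        print(f"route to human review: group {group}, ratio {ratio:.3f}")
```

In this hypothetical batch, group B's approval rate is 0.625 of group A's, so it would be routed to a reviewer. In practice, the choice of groups, metrics, and thresholds would come from the institution's own policies and legal guidance.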

Building on trust, the human imperative

In financial services, trust is essential because it directly impacts customer engagement and satisfaction. A lack of trust may lead to customers perceiving the system as unfair or unreliable, affecting the institution’s reputation, customer loyalty, and retention.

Trustworthy AI is designed with human collaboration as the preferred model, following a “trust but verify” approach. A recent Yahoo Finance article reports on a Yahoo Finance-Ipsos poll indicating higher levels of trust in AI financial advisors among younger generations. However, while younger generations are more accepting of AI financial advice, majorities of all generations still prefer human involvement in financial advice.

The result: ethical, trustworthy, explainable AI

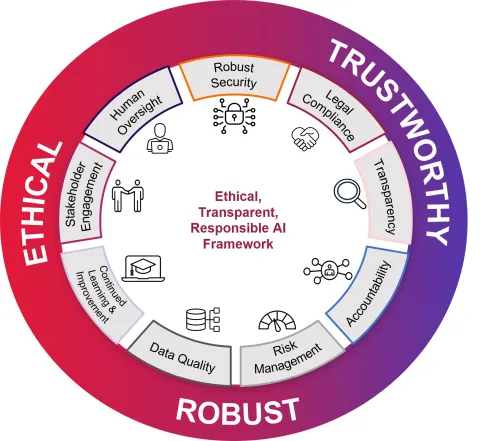

AI guardrails must prioritize ethics and explainability to build trust with all stakeholders, which supports the development of well-designed, responsible AI tools. By employing transparent models and fostering a culture of ethical AI use, FIs can deploy AI technologies responsibly and reap the benefits. CGI's Responsible AI Framework champions this approach: We promote fairness through ethical design, ensure trustworthiness with explainable models, and guarantee robustness through rigorous development, testing, and operations. Privacy and security remain paramount and aligned with regulations. This approach enhances customer experience (CX), boosts loyalty, satisfies regulators, and drives business growth and success.

CGI is a trusted artificial intelligence (AI) expert, helping clients understand and deliver responsible AI. We combine our end-to-end capabilities in data science and machine learning with deep domain knowledge and technology engineering skills to generate new insights, experiences, and business models powered by AI.

Connect with us to learn more about how CGI is committed to helping organizations with the development of ethical, explainable, trustworthy AI.

* As of the date of this blog, the ADPPA has not been enacted into law.